Serverless computing is no longer just a buzzword in Information Technology: established industries and startups alike are gradually migrating towards dynamic resource management. Companies typically invest a good portion of their budget and manpower in maintaining and upgrading the servers that host the different functionalities of an application. Server maintenance is treated as a mandatory step because services must keep up with an ever-growing customer base and workload without downtime for end users. With serverless computing, however, companies such as Netflix and Codepen no longer need to handle that server maintenance themselves, as it is taken over by third-party providers.

What is Serverless Architecture?

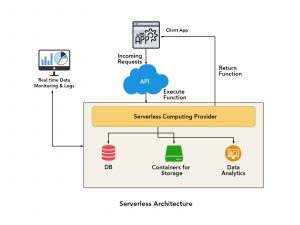

The name “Serverless” does not imply that there are zero servers involved. Rather, it indicates that product owners do not need to worry about provisioning or maintaining a server. In simple terms, serverless computing lets developers run code without provisioning or maintaining either physical or virtual servers. Server maintenance is taken care of by third-party vendors with scalable platforms such as AWS Lambda, Google Cloud Functions, and Azure Functions. The idea is to provide continuous scaling of services without the need to monitor or maintain the resources. Developers are only required to build their code and upload it to the serverless platform. Running the services and auto-scaling the instances are handled automatically by the selected provider. The functions are triggered by events, such as external HTTP requests arriving through an API gateway or, on AWS, events from other AWS services.
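To make the flow concrete, here is a minimal sketch of a function written for a Lambda-style Python runtime and invoked by an HTTP request through an API gateway. The event shape (`queryStringParameters`) and the response shape (`statusCode`, `headers`, `body`) assume an API Gateway proxy-style integration; the function name `handler` and the `name` query parameter are illustrative choices, not taken from any real application.

```python
import json


def handler(event, context):
    """Entry point the platform calls once per incoming HTTP request.

    Assumes a proxy-style event: a dict whose "queryStringParameters"
    key holds the query string as a dict, or None when it is absent.
    """
    params = event.get("queryStringParameters") or {}
    name = params.get("name", "world")  # "name" is a hypothetical parameter

    # The platform turns this dict into the HTTP response.
    return {
        "statusCode": 200,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps({"message": f"Hello, {name}!"}),
    }
```

Nothing in the function refers to servers, ports, or instance counts; the provider decides how many copies run at any given moment.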

The event-driven approach adapts to varying incoming workloads and creates a real-time responsive architecture. This enables companies to reduce costs and redirect their workforce towards improving product features. Serverless computing is especially advantageous to startups because it follows a pay-as-you-go model that charges only for the resources consumed while code is running. This means that if your code stays idle, no compute cost is incurred.
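The pay-as-you-go idea can be illustrated with a back-of-the-envelope estimate. The sketch below assumes a pricing scheme with a fee per request plus a fee per GB-second of compute; the two rates are placeholder values chosen for easy arithmetic, not any provider's actual prices.

```python
# Placeholder rates for illustration only; check your provider's price list.
PRICE_PER_MILLION_REQUESTS = 0.20   # assumed, in dollars
PRICE_PER_GB_SECOND = 0.00002       # assumed, in dollars


def estimate_monthly_cost(invocations, avg_duration_ms, memory_mb):
    """Estimate a monthly bill from usage alone (no fixed server fee)."""
    request_cost = invocations / 1_000_000 * PRICE_PER_MILLION_REQUESTS
    # Compute is billed as memory (GB) multiplied by run time (seconds).
    gb_seconds = invocations * (avg_duration_ms / 1000) * (memory_mb / 1024)
    return request_cost + gb_seconds * PRICE_PER_GB_SECOND


# Idle code is never invoked, so it costs nothing.
print(estimate_monthly_cost(0, 200, 512))  # 0.0
# One million 200 ms invocations at 512 MB under the assumed rates.
print(round(estimate_monthly_cost(1_000_000, 200, 512), 2))  # 2.2
```

The bill scales with the number of invocations and their duration, which is why a workload with long idle periods tends to benefit most from this model.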

Why prefer Serverless over Microservices?

The microservices-based model is characterized by the idea that business functionalities are split into multiple stable, asynchronous services, each fully owned by its respective developers. Microservices come with a set of advantages such as fault isolation, technological flexibility, scalability, and consumption-based billing. However, microservices are commonly hosted on Infrastructure as a Service (IaaS), which entails constant management overhead to keep the servers up to date via patching cycles and to maintain backups.

In the serverless model, each action of the application is treated as a separate function, decoupled from the others. This is highly advantageous because each function can scale independently depending on its workload. One of the most popular use cases is CI/CD (Continuous Integration and Continuous Deployment), which helps in deploying code and bug fixes in small increments on a daily basis. Serverless architecture can automate most of these tasks by using the code check-in as a trigger. Unlike with microservices hosted on self-managed servers, creating builds and automatically rolling back in case of any issues can be carried out without any direct infrastructure management.
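As a rough illustration of using a code check-in as the trigger, the sketch below shows a function that builds and deploys a commit and rolls back if a health check fails. The event field `commit_id` and the `build`, `deploy`, `health_check`, and `rollback` callables are hypothetical stand-ins for whatever the chosen CI/CD service provides; they are not a real API.

```python
def on_code_checkin(event, build, deploy, health_check, rollback):
    """Run one build-and-deploy cycle for the commit named in the event.

    All four callables are hypothetical hooks supplied by the caller.
    Returns "deployed" on success and "rolled_back" otherwise.
    """
    commit_id = event["commit_id"]  # assumed event field
    artifact = build(commit_id)
    deploy(artifact)
    if health_check():
        return "deployed"
    # The new release is unhealthy: restore the previous one.
    rollback()
    return "rolled_back"
```

Because the function only runs when a check-in event arrives, there is no build server to keep running between commits.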

Serverless Architecture Use Cases

When is the right time to migrate to a serverless architecture? A few popular use cases that can define an organization’s need to adopt the Function as a Service (FaaS) model are as follows:

- IoT Support: A serverless architecture that can aggregate data from different devices, compute and analyze it, and trigger the respective functions can provide a scalable, low-budget solution for industries. Serverless backends can also be customized to handle mobile, web, and third-party API requests.

- Image & Video Processing: Image processing has emerged as an early frontrunner in adopting the serverless approach, especially among organizations dealing with facial and image recognition. At a 2016 conference, IBM demonstrated its own serverless tool, OpenWhisk, by using a drone to capture aerial pictures that were then subjected to cognitive analysis through custom APIs. More practical use cases of IBM’s OpenWhisk include surveying agricultural fields, detecting flood-affected areas, supporting search and rescue operations, and conducting infrastructure inspections.

- Hybrid Cloud Vendors: Enterprises have varying cloud requirements, and there are several providers in the market with different services. Enterprises tend to use the strongest services from each vendor, which makes the application dependent on multiple third-party tools. Serverless computing makes it possible to use services from any cloud providers of their preference and connect them using custom APIs.
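For the IoT use case in the list above, a minimal sketch of an aggregation function might look like the following. The event layout (a `readings` list of `device_id` and `temperature` pairs), the threshold value, and the function name are assumptions made for illustration.

```python
from collections import defaultdict
from statistics import mean

ALERT_THRESHOLD = 75.0  # hypothetical limit, in the sensor's own unit


def aggregate_readings(event):
    """Average the readings per device and flag devices above the limit."""
    by_device = defaultdict(list)
    for reading in event["readings"]:
        by_device[reading["device_id"]].append(reading["temperature"])

    averages = {device: mean(values) for device, values in by_device.items()}
    # In a real deployment each alert could trigger a follow-up function.
    alerts = sorted(d for d, avg in averages.items() if avg > ALERT_THRESHOLD)
    return {"averages": averages, "alerts": alerts}
```

Each batch of readings is processed independently, so the platform can run as many copies of the function as the number of reporting devices requires.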

Benefits of adopting the serverless architecture

- Event-driven functions to execute business logic.

- Pay-as-you-go model that cuts major costs related to infrastructure maintenance.

- Reduced time to market, thereby enabling faster code deployment.

- Auto-scaling architecture with on-demand availability.

- Faster disaster recovery and rollback options to ensure that the user interface operates 24×7.

The serverless model is a compelling concept that uses the cloud to its full potential without requiring infrastructure management. Because market dynamics are evolving at an accelerating pace, organizations are forced to keep up with current trends, and serverless computing acts as a catalyst for achieving that goal. Although adopting serverless computing entails a set of challenges, organizations are rapidly adopting the FaaS model due to its significant benefits, and this adoption is projected to increase over time.

The advent of various tools has considerably simplified the development of serverless applications. In the case of AWS, CloudFormation templates, written in YAML or JSON, are the basis on which services are specified for deployment, although large templates can become unmanageable. Tools such as AWS Amplify simplify this further, so that developers can focus on their code while the tool takes care of deployment.