What is Performance Testing?

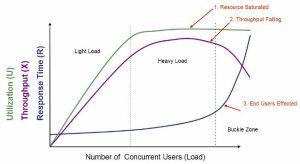

Performance testing is the process of determining how a web application and its operating environment respond at various user load levels. In general, we measure the latency, throughput, and resource utilization of the website while simulating virtual users who access the site simultaneously. One of the main objectives of performance testing is to keep the website at low latency and high throughput while resource utilization stays low.
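To make latency and throughput concrete, here is a minimal Python sketch that summarizes a run from per-request start and end timestamps. The `Sample` type and `summarize` function are hypothetical names, and the sketch assumes your load tool can export one timestamp pair per request. It does not cover utilization, which comes from server-side monitors.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class Sample:
    """One virtual-user request: when it started and finished, in seconds."""
    start: float
    end: float


def summarize(samples: list[Sample]) -> dict[str, float]:
    """Return average latency (s) and throughput (requests/s) for a run."""
    latencies = [s.end - s.start for s in samples]
    # Measurement window: from the first request start to the last completion.
    window = max(s.end for s in samples) - min(s.start for s in samples)
    return {
        "avg_latency_s": mean(latencies),
        "throughput_rps": len(samples) / window,
    }


# Hypothetical timestamps from three overlapping requests.
samples = [Sample(0.0, 0.2), Sample(0.1, 0.4), Sample(0.5, 1.0)]
print(summarize(samples))
```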

Performance testing measures how well the application meets customer expectations in terms of:

- Speed – determines if the application responds quickly

- Scalability – determines how much user load the application can handle

- Stability – determines if the application is stable under varying loads

Why Performance Testing?

Performance problems are usually the result of contention for, or exhaustion of, some system resource. When a system resource is exhausted, the system is unable to scale to higher levels of performance. Maintaining optimum Web application performance is a top priority for application developers and administrators.
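Why does an exhausted resource stop the system from scaling? A textbook single-queue model (M/M/1, which assumes random arrivals and one server; it is an idealization swapped in here, not something this article prescribes) gives utilization U = λS and mean response time R = S / (1 - U), where λ is the arrival rate and S is the service time. The hypothetical function below shows response time climbing steeply as U approaches 1.

```python
def mm1_response_time(service_time_s: float, arrival_rate_rps: float) -> float:
    """Mean response time of an M/M/1 queue: R = S / (1 - U), where U = lambda * S."""
    utilization = arrival_rate_rps * service_time_s
    if utilization >= 1.0:
        # Resource exhausted: arrivals outpace service and the queue grows without bound.
        return float("inf")
    return service_time_s / (1.0 - utilization)


# Hypothetical resource with a 10 ms service time under increasing load.
for rate in (10, 50, 90, 99, 100):
    r_ms = mm1_response_time(0.010, rate) * 1000
    print(f"{rate:>3} req/s  U={rate * 0.010:.2f}  R={r_ms:.1f} ms")
```

The point is the shape of the curve, not the exact numbers: well below saturation, added load barely changes response time, while near saturation a small increase in load multiplies it.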

Performance analysis is also carried out for various purposes such as:

- During the design or redesign of a module or part of the system, more than one alternative often presents itself. In such cases, evaluating the design alternatives is the main driver for the analysis.

- Post-deployment realities create a need for tuning the existing system. A systematic approach like performance analysis is essential to extract maximum benefit from an existing system.

- Identifying bottlenecks in a system is primarily a troubleshooting effort. It helps the team decide which components to replace and where to focus efforts to improve overall system response.

- As the user base grows, the cost of failure becomes increasingly unbearable. To increase confidence and get advance warning of problems that may appear under heavy load, the analysis must forecast performance at the expected load levels.
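Bottleneck identification and load forecasting can be roughed out with the utilization law, U_k = X × D_k, where X is throughput and D_k is the service demand one transaction places on resource k. The resource with the largest demand saturates first and caps throughput at 1 / max(D_k). This Python sketch assumes you have already measured per-transaction service demands; the resource names and values are hypothetical.

```python
def bottleneck(service_demands_s: dict[str, float]) -> tuple[str, float]:
    """Return the bottleneck resource and the throughput ceiling it imposes.

    By the utilization law U_k = X * D_k, the resource with the largest
    service demand D_k reaches 100% utilization first, so X_max = 1 / max(D_k).
    """
    name = max(service_demands_s, key=service_demands_s.get)
    return name, 1.0 / service_demands_s[name]


def utilizations(throughput_tps: float, service_demands_s: dict[str, float]) -> dict[str, float]:
    """Forecast each resource's utilization at a target throughput."""
    return {k: throughput_tps * d for k, d in service_demands_s.items()}


# Hypothetical per-transaction service demands, in seconds.
demands = {"cpu": 0.020, "disk": 0.050, "network": 0.005}
print(bottleneck(demands))        # disk caps throughput at about 20 transactions/s
print(utilizations(15, demands))  # forecast utilization of each resource at 15 transactions/s
```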

Typically, to debug an application, developers execute it along different execution streams (i.e., they exercise the application completely) in an attempt to find errors. When looking for errors, performance is secondary to features; however, it still matters.

Objectives of Performance Testing

- Measure end-to-end transaction response time.

- Measure the Application Server component’s performance under various loads.

- Measure database components’ performance under various loads.

- Monitor system resources under various loads.

- Measure the network delay between the server and the clients.
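End-to-end response times are usually reported as percentiles rather than averages, because a few slow transactions can hide inside a mean. A minimal sketch using the nearest-rank definition; this is one of several conventions, and many tools interpolate instead, so their figures may differ slightly. The sample times are made up for illustration.

```python
import math


def percentile(values: list[float], p: float) -> float:
    """Nearest-rank percentile: smallest value with at least p% of samples at or below it."""
    if not values or not 0 < p <= 100:
        raise ValueError("need at least one sample and 0 < p <= 100")
    ordered = sorted(values)
    rank = math.ceil(p * len(ordered) / 100)  # 1-based rank into the sorted list
    return ordered[rank - 1]


# Hypothetical end-to-end response times (seconds) for one transaction.
times = [0.8, 1.1, 0.9, 2.4, 1.0, 1.3, 0.7, 1.2, 5.0, 1.0]
for p in (50, 90, 95):
    print(f"p{p}: {percentile(times, p)} s")
```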

Performance Testing Approach

Identify the Test Environment

Identify the physical test environment and the production environment as well as the tools and resources available to the test team. The physical environment includes hardware, software, and network configurations. Having a thorough understanding of the entire test environment at the outset enables more efficient test design and planning and helps you identify testing challenges early in the project. In some situations, this process must be revisited periodically throughout the project’s life cycle.
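One way to keep the test-versus-production comparison explicit is to record both environments as key-value inventories and diff them. A minimal sketch; the inventory keys and values are hypothetical, and in practice they would come from your configuration or asset records.

```python
def diff_environments(test_env: dict[str, str], prod_env: dict[str, str]) -> dict[str, tuple]:
    """Return every setting whose value differs (or is missing) between two environments."""
    keys = sorted(test_env.keys() | prod_env.keys())
    return {
        k: (test_env.get(k), prod_env.get(k))
        for k in keys
        if test_env.get(k) != prod_env.get(k)
    }


# Hypothetical inventories covering hardware, software, and network configuration.
test_inventory = {"cpu_cores": "4", "ram_gb": "16", "os": "Ubuntu 22.04", "db": "PostgreSQL 15"}
prod_inventory = {"cpu_cores": "16", "ram_gb": "64", "os": "Ubuntu 22.04", "db": "PostgreSQL 15",
                  "load_balancer": "yes"}

for key, (t, p) in diff_environments(test_inventory, prod_inventory).items():
    print(f"{key}: test={t} production={p}")
```

Every reported difference is a reason to be careful when extrapolating test results to production.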

Identify Performance Acceptance Criteria

Identify the response time, throughput, and resource utilization goals and constraints. In general, response time is a user concern, throughput is a business concern, and resource utilization is a system concern. Additionally, identify project success criteria that may not be captured by those goals and constraints; for example, using performance tests to evaluate what combination of configuration settings will result in the most desirable performance characteristics.
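Response time (a user concern) and throughput (a business concern) are linked to the number of concurrent users through the interactive form of Little's law, N = X × (R + Z), where Z is the user think time. The sketch below assumes a steady-state workload and uses hypothetical goal values; it turns a concurrent-user target into the throughput the system must sustain.

```python
def concurrent_users(throughput_tps: float, response_time_s: float, think_time_s: float) -> float:
    """Interactive form of Little's law: N = X * (R + Z)."""
    return throughput_tps * (response_time_s + think_time_s)


def required_throughput(users: int, response_time_s: float, think_time_s: float) -> float:
    """Rearranged: X = N / (R + Z), the throughput that N users generate."""
    return users / (response_time_s + think_time_s)


# Hypothetical goals: 500 concurrent users, 2 s response time, 8 s think time.
print(required_throughput(500, 2.0, 8.0))  # 50.0 transactions per second
```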

Plan and Design Tests

Identify key scenarios, determine variability among representative users and how to simulate that variability, define test data, and establish metrics to be collected. Consolidate this information into one or more models of system usage to be implemented, executed, and analyzed.
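A workload model often boils down to a weighted mix of key scenarios plus randomized pacing to represent variability among users. The scenario names, weights, and exponential think-time distribution here are illustrative assumptions, not a recommended profile; seeding the random generator keeps runs repeatable.

```python
import random

# Hypothetical workload model: each key scenario and its share of user sessions.
SCENARIO_MIX = {"browse_catalog": 0.60, "search": 0.25, "checkout": 0.15}


def plan_sessions(n_users: int, seed: int = 42) -> list[str]:
    """Assign each virtual user a scenario according to the mix (seeded for repeatability)."""
    rng = random.Random(seed)
    names = list(SCENARIO_MIX)
    weights = list(SCENARIO_MIX.values())
    return rng.choices(names, weights=weights, k=n_users)


def think_time(rng: random.Random, mean_s: float = 5.0) -> float:
    """Exponentially distributed think time, so users do not act in lockstep."""
    return rng.expovariate(1.0 / mean_s)


plan = plan_sessions(1000)
for name in SCENARIO_MIX:
    print(name, plan.count(name))
```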

Configure the Test Environment

Prepare the test environment, tools, and resources necessary to execute each strategy as features and components become available for test. Ensure that the test environment is instrumented for resource monitoring as necessary.
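Server-side resource monitoring normally relies on the operating system's own counters, but instrumenting the load generator as well helps you tell when the client itself is the bottleneck. A minimal sketch using only the standard library's `time.perf_counter` and `time.process_time`; `resource_monitor` is a hypothetical helper, and it measures only the current process.

```python
import time
from contextlib import contextmanager


@contextmanager
def resource_monitor(label: str, results: dict):
    """Record wall-clock time and this process's CPU time for the enclosed block."""
    wall_start, cpu_start = time.perf_counter(), time.process_time()
    try:
        yield
    finally:
        wall = time.perf_counter() - wall_start
        cpu = time.process_time() - cpu_start
        results[label] = {
            "wall_s": wall,
            "cpu_s": cpu,
            # Fraction of elapsed time spent on CPU (can exceed 1 with several busy threads).
            "cpu_share": cpu / wall if wall > 0 else 0.0,
        }


metrics: dict = {}
with resource_monitor("busy_loop", metrics):
    sum(i * i for i in range(200_000))  # CPU-bound work
with resource_monitor("sleep", metrics):
    time.sleep(0.1)  # waiting, not computing
print(metrics)
```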

Implement the Test Design

Develop the performance tests in accordance with the test design.
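A performance test ultimately runs a scripted transaction from many concurrent virtual users. The sketch below uses a thread pool with one thread per virtual user; `transaction` is a hypothetical placeholder for the real client calls your test design specifies, and thread-based load generation in Python suits only modest, I/O-bound loads.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def transaction() -> bool:
    """Hypothetical stand-in for one scripted business transaction against the AUT."""
    time.sleep(0.01)  # replace with real client calls
    return True


def virtual_user(iterations: int) -> list[tuple[float, bool]]:
    """Run the script repeatedly, recording (response_time_s, succeeded) per iteration."""
    samples = []
    for _ in range(iterations):
        start = time.perf_counter()
        try:
            ok = transaction()
        except Exception:
            ok = False
        samples.append((time.perf_counter() - start, ok))
    return samples


def run_load(users: int, iterations: int) -> list[tuple[float, bool]]:
    """Start `users` concurrent virtual users and gather all of their samples."""
    with ThreadPoolExecutor(max_workers=users) as pool:
        futures = [pool.submit(virtual_user, iterations) for _ in range(users)]
        return [s for f in futures for s in f.result()]


results = run_load(users=10, iterations=5)
print(len(results), "samples,", sum(ok for _, ok in results), "succeeded")
```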

Execute the Test

Run and monitor your tests. Validate the tests, test data, and results collection. Execute validated tests for analysis while monitoring the test and the test environment.
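Validating results collection can be as simple as checking that every expected sample arrived and that the error rate is low enough for the run to be worth analyzing. This sketch assumes samples shaped as `(response_time_s, succeeded)` tuples; the function name and the 1% default threshold are hypothetical.

```python
def validate_run(samples: list[tuple[float, bool]], users: int, iterations: int,
                 max_error_rate: float = 0.01) -> list[str]:
    """Return problems that make a run unfit for analysis (an empty list means valid)."""
    problems = []
    expected = users * iterations
    if len(samples) != expected:
        problems.append(f"collected {len(samples)} samples, expected {expected}")
    if samples:
        errors = sum(1 for _, ok in samples if not ok)
        if errors / len(samples) > max_error_rate:
            problems.append(f"error rate {errors / len(samples):.1%} exceeds {max_error_rate:.1%}")
        if any(t < 0 for t, _ in samples):
            problems.append("negative response time: clock or collection error")
    return problems


good = [(0.2, True)] * 10
flaky = [(0.2, True)] * 8 + [(0.3, False)] * 2
print(validate_run(good, users=2, iterations=5))
print(validate_run(flaky, users=2, iterations=5))
```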

Analyze Results, Report, and Retest

Consolidate and share results data. Analyze the data both individually and as a cross-functional team. Reprioritize the remaining tests and re-execute them as needed. When all of the metric values are within accepted limits, none of the set thresholds have been violated, and all of the desired information has been collected, you have finished testing that particular scenario on that particular configuration.
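The stopping condition above (every desired metric collected and within its limit) can be checked mechanically. A minimal sketch, assuming all limits are upper bounds; a lower-bound goal such as minimum throughput would need a separate comparison. Metric names and numbers are hypothetical.

```python
def scenario_complete(metrics: dict[str, float],
                      limits: dict[str, float]) -> tuple[bool, list[str]]:
    """Check that every limited metric was collected and stays within its upper limit."""
    issues = []
    for name, limit in limits.items():
        if name not in metrics:
            issues.append(f"{name}: not collected")
        elif metrics[name] > limit:
            issues.append(f"{name}: {metrics[name]} exceeds limit {limit}")
    return not issues, issues


# Hypothetical upper limits and measurements for one scenario/configuration.
limits = {"p95_response_s": 3.0, "error_rate": 0.01, "cpu_util": 0.80}
measured = {"p95_response_s": 2.4, "error_rate": 0.002, "cpu_util": 0.91}
print(scenario_complete(measured, limits))
```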

Functions of a Typical Tool

- Record & Replay:

  - Record the application workflow and play back the script to verify the recording.

- Execute:

  - Run the fully developed test script with a stipulated number of virtual users to generate load on the AUT (Application Under Test).

  - Display the values of the desired parameters on a dashboard.

  - Connect remotely to the app/web servers (Linux/Windows) to gather resource utilization data.

- Analyze:

  - Generate reports that help analyze the results and troubleshoot issues.
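Record & Replay amounts to storing a user journey as a list of steps and playing it back while checking the responses. In this sketch `Step`, `replay`, and the recorded paths are hypothetical, and `fake_send` stands in for a real HTTP client so the example runs offline.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Step:
    """One recorded user action and the response status seen while recording."""
    method: str
    path: str
    expected_status: int


# Hypothetical recorded workflow (a login-and-search journey).
RECORDING = [
    Step("GET", "/login", 200),
    Step("POST", "/login", 302),
    Step("GET", "/search?q=laptop", 200),
]


def replay(steps: list[Step], send: Callable[[str, str], int]) -> list[str]:
    """Play back recorded steps via send(method, path) -> status; report mismatches."""
    mismatches = []
    for i, step in enumerate(steps, start=1):
        status = send(step.method, step.path)
        if status != step.expected_status:
            mismatches.append(f"step {i} {step.method} {step.path}: "
                              f"got {status}, expected {step.expected_status}")
    return mismatches


def fake_send(method: str, path: str) -> int:
    """Offline stand-in transport; a real replay would issue HTTP requests."""
    return 302 if method == "POST" else 200


print(replay(RECORDING, fake_send) or "recording verified")
```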

Attributes Considered for Performance Testing

The following are only a few of the many attributes considered during performance testing:

- Throughput

- Response Time

- Time {Session time, reboot time, printing time, transaction time, task execution time}

- Hits per second, requests per second, transactions per second

- Performance measurement with varying numbers of users

- Performance measurement with other interacting applications or tasks

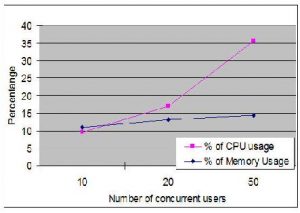

- CPU usage

- Memory usage {memory leaks, thread leaks}

- All queues and IO waits

- Bottlenecks {Memory, cache, process, processor, disk, and network}

- Highly iterative loops in the code

- Data not optimally aligned in memory

- Poorly structured joins in SQL queries

- Too many static variables

- Indexes on the wrong columns; inappropriate combinations of columns in composite indexes

- Network usage {bytes, packets, segments, and frames received and sent per second; Bytes Total/sec; Current Bandwidth; Connection Failures; Connections Active; failures at the network interface level and protocol level}

- Database problems {settings and configuration, usage, reads/sec, writes/sec, locking, queries, compilation errors}

- Web server {requests and responses per second, services succeeded and failed, server problems if any}

- Screen transition
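Hits, requests, and transactions per second are all counts bucketed by time. The hypothetical helper below does the bucketing for any list of event timestamps (seconds since test start); which events you feed it determines which rate you get.

```python
from collections import Counter


def per_second(timestamps_s: list[float]) -> dict[int, int]:
    """Bucket non-negative event timestamps (seconds since test start) into per-second counts."""
    counts = Counter(int(t) for t in timestamps_s)
    if not counts:
        return {}
    # Include empty seconds so dips to zero stay visible.
    return {sec: counts.get(sec, 0) for sec in range(min(counts), max(counts) + 1)}


# Hypothetical request timestamps from a short run.
stamps = [0.1, 0.4, 0.9, 1.2, 3.5, 3.6, 3.7]
print(per_second(stamps))  # {0: 3, 1: 1, 2: 0, 3: 3}
```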

Throughput and Response time with different user loads

CPU and Memory Usage with different user loads

Have questions? Contact the software testing experts at InApp to learn more.