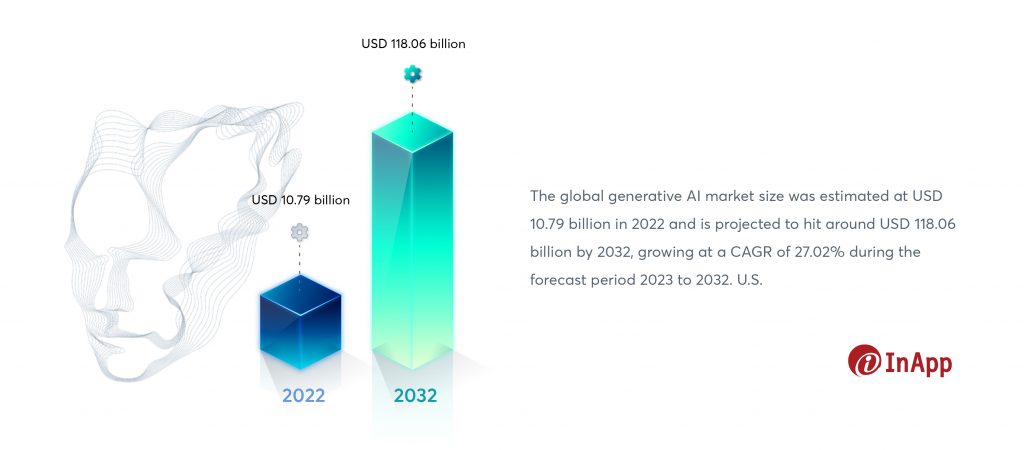

Generative AI, a subset of artificial intelligence (AI), has advanced rapidly in recent years and is reshaping a wide range of industries. The latest McKinsey Global Survey on the current state of AI confirms the explosive growth of generative AI (gen AI). Industries such as healthcare, finance, entertainment, and manufacturing have adopted generative AI to streamline processes, enhance creativity, and transform user experiences.

The rapid integration of generative AI across sectors raises security concerns, including data breaches, adversarial attacks, and ethical implications. Ensuring generative AI security is crucial for safeguarding sensitive information, maintaining model integrity, and preventing unintended consequences.

With generative AI playing a pivotal role in decision-making and customer interactions, the repercussions of security lapses extend beyond financial losses, impacting trust and reputation. A robust security framework is imperative to address the intersection of powerful AI capabilities and the potential for misuse.

Now that we’ve explored why securing generative AI matters, let’s look at the specific steps needed to establish a robust foundation.

Understanding Generative AI Security

With that context in place, let’s dive into the key concepts that underpin the security of generative AI models.

At its core, Generative AI Security involves the protection of generative AI models, algorithms, and the data they process. The security measures are designed to ensure the confidentiality, integrity, and availability of both the generative AI system and the data it interacts with. This includes safeguarding against unauthorized access, preventing data breaches, and mitigating the potential misuse of generative AI for malicious purposes.

Securing generative AI models is of paramount importance due to the intricate nature of these systems. As generative AI models become more sophisticated, they are capable of generating content that closely mimics human-created data. While this innovation opens doors to creative possibilities, it also introduces risks such as generating misleading or harmful content, which can have real-world consequences.

The significance of securing generative AI models is heightened by the potential risks and vulnerabilities associated with their deployment. Adversarial attacks, wherein malicious actors intentionally manipulate input data to mislead the generative AI model, pose a substantial threat. Without adequate security measures, generative AI systems may inadvertently produce biased or unethical outputs, impacting decision-making processes, public trust, and organizational reputation.

Securing Generative AI: Key Concepts

As we explore the foundational concepts, it’s essential to recognize the inherent risks and concerns associated with generative AI. Applied well, these concepts not only protect the integrity of the models but also pave the way for responsible and ethical AI development.

- Model Robustness and Adversarial Resistance

At the heart of securing generative AI lies the crucial concept of model robustness. It involves fortifying AI models to withstand unforeseen challenges and adversarial manipulations. Imagine a generative AI model as a digital artisan crafting intricate designs. Ensuring its robustness is akin to providing the artisan with tools capable of discerning between genuine creative input and misleading alterations.

This resilience is vital in the face of adversarial attacks—deliberate attempts to trick the model into producing unintended outputs. The goal is to create a model that not only excels in generating accurate and desired content but also stands resilient against attempts to deceive or manipulate its decision-making processes.

- Privacy-Preserving Generative AI

As generative AI delves into realms where sensitive data intertwines with creative processes, the concept of privacy preservation becomes paramount. Picture a generative AI model working on personalized medical data or crafting content based on user-specific preferences. Privacy-preserving generative AI ensures that while the model extracts insights and generates content, it does so without compromising the confidentiality of the underlying data.

This involves employing cryptographic techniques and privacy-enhancing technologies to strike a delicate balance between innovation and data protection. It’s akin to allowing the generative AI to unfold its creative prowess within the confines of privacy, ensuring that the generated outputs don’t inadvertently reveal sensitive information about individuals.
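One concrete output-side safeguard is to scan generated text for obvious personal identifiers before it is returned to the user. The sketch below is a minimal illustration under stated assumptions: the two regex patterns (email addresses and US-style phone numbers) and the function name `redact_output` are hypothetical, and pattern matching catches far less than a dedicated PII detector would.

```python
import re

# Illustrative patterns only (assumption); real PII detection needs much broader coverage.
PII_PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "PHONE": re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b"),
}


def redact_output(text: str) -> tuple[str, list[str]]:
    """Replace matched identifiers with placeholders and report which kinds were found."""
    found = []
    for label, pattern in PII_PATTERNS.items():
        text, count = pattern.subn(f"[{label}]", text)
        if count:
            found.append(label)
    return text, found


if __name__ == "__main__":
    generated = "Contact Jane at jane.doe@example.com or 555-123-4567."
    clean, kinds = redact_output(generated)
    print(clean)  # Contact Jane at [EMAIL] or [PHONE].
    print(kinds)  # ['EMAIL', 'PHONE']
```

Note that the name "Jane" passes through untouched, which illustrates why regex filtering is a last line of defense rather than a substitute for keeping sensitive data out of training in the first place.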

In the intricate dance between technological advancement and ethical responsibility, understanding and implementing these key concepts becomes the cornerstone of securing generative AI models. It’s not merely about fortifying algorithms; it’s about crafting a future where AI innovation aligns harmoniously with ethical imperatives.

Generative AI is the most powerful tool for creativity that has ever been created. It has the potential to unleash a new era of human innovation.

Elon Musk

Risks & Concerns with Generative AI

Understanding the risks paves the way for addressing privacy challenges, a crucial aspect of responsible generative AI development. Here are some common challenges associated with generative AI:

1. Bias and Fairness

Generative AI models are trained on data, and if the training data contains biases, the model may perpetuate and even amplify these biases. This can lead to unfair and discriminatory outcomes, especially in applications involving decision-making.
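Bias can be measured as well as described. A minimal sketch, assuming binary decisions (1 = favorable) and a single group attribute, is the demographic parity difference: the gap between groups in the rate of favorable outcomes. The function names, group labels, and data below are hypothetical.

```python
from collections import defaultdict


def positive_rates(decisions: list[int], groups: list[str]) -> dict[str, float]:
    """Fraction of favorable (1) decisions for each group."""
    totals: dict[str, int] = defaultdict(int)
    positives: dict[str, int] = defaultdict(int)
    for decision, group in zip(decisions, groups):
        totals[group] += 1
        positives[group] += decision
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_difference(decisions: list[int], groups: list[str]) -> float:
    """Largest gap in favorable-outcome rate between any two groups (0.0 means parity)."""
    rates = positive_rates(decisions, groups)
    return max(rates.values()) - min(rates.values())


if __name__ == "__main__":
    decisions = [1, 1, 1, 0, 1, 0, 0, 0]
    groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
    print(positive_rates(decisions, groups))  # {'A': 0.75, 'B': 0.25}
    print(demographic_parity_difference(decisions, groups))  # 0.5
```

A large gap does not by itself prove unfair treatment, but it flags model outputs that deserve a closer audit.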

2. Adversarial Attacks

Generative AI models are vulnerable to adversarial attacks, where malicious actors deliberately manipulate input data to deceive the model. These attacks can lead to the generation of incorrect or unintended outputs, posing threats in scenarios where the model is used for critical tasks.

3. Privacy Concerns

As generative AI often involves working with sensitive data, there are concerns about the privacy implications of generated content. In healthcare, for example, generating personalized medical information raises questions about the confidentiality and privacy of patient data.

4. Deepfake Proliferation

Generative AI can be used to create highly realistic deepfakes—AI-generated content that convincingly mimics real people or events. The proliferation of deepfakes raises concerns about misinformation, as it becomes challenging to distinguish between authentic and AI-generated content.

5. Security Risks

Insecure generative AI models may become targets for security breaches. If not adequately protected, these models can be exploited to generate harmful or malicious content, impacting users, organizations, or systems.

6. Ethical Considerations

The ethical implications of generative AI involve questions about the responsible use of technology. Understanding the boundaries of AI creativity and ensuring that AI-generated content aligns with ethical standards is a growing concern.

7. Regulatory Compliance

The evolving landscape of generative AI may outpace existing regulations. Ensuring compliance with ethical guidelines and legal frameworks becomes challenging, and organizations need to adapt to changing regulatory environments.

8. Unintended Consequences

Generative AI models may produce outputs with unintended consequences, especially when faced with novel or ambiguous situations. Ensuring that AI behaves in a predictable and controllable manner is a significant challenge.

Addressing these risks and concerns requires a multi-faceted approach, combining technical advancements, ethical considerations, and regulatory frameworks to foster the responsible development and deployment of generative AI.

Privacy in Generative AI

Generative AI, with its ability to craft personalized and contextually rich content, introduces a complex web of privacy challenges that demand careful consideration. As generative AI models delve into the intricacies of individual data, ethical concerns and robust privacy measures become integral aspects of responsible development.

Generative AI often operates on vast datasets, including personal information, images, and behaviors. The challenge arises in balancing the innovation derived from utilizing such data with the need to protect individual privacy. In applications like personalized content generation or deepfake creation, there’s an inherent tension between the desire for customization and the imperative to safeguard sensitive information.

Strategies for Implementing Privacy Measures

Addressing privacy concerns in generative AI requires a proactive and comprehensive approach:

- Data Minimization and Anonymization

Limiting the amount of data used and removing personally identifiable information during the training phase helps mitigate privacy risks. Anonymizing data makes it much harder to trace generated content back to specific individuals, although it is not a guarantee on its own: remaining attributes can sometimes be combined to re-identify people.
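Data minimization and identifier removal can be sketched as a preprocessing step. This example, with a hypothetical schema (`ALLOWED_FIELDS`) and function name, keeps only the fields a training job needs and replaces the user ID with a keyed hash. Strictly speaking this is pseudonymization rather than full anonymization, since quasi-identifiers left in the record could still enable re-identification.

```python
import hashlib
import hmac

# Hypothetical schema (assumption): only these fields are needed for training.
ALLOWED_FIELDS = {"age_band", "text"}


def minimize_record(record: dict, secret_key: bytes) -> dict:
    """Drop unneeded fields and replace the user ID with a keyed pseudonym."""
    minimized = {k: v for k, v in record.items() if k in ALLOWED_FIELDS}
    # A keyed HMAC rather than a bare hash, so pseudonyms cannot be linked back
    # to users by hashing guessed IDs without the secret key.
    digest = hmac.new(secret_key, str(record["user_id"]).encode(), hashlib.sha256)
    minimized["pseudonym"] = digest.hexdigest()[:16]
    return minimized


if __name__ == "__main__":
    key = b"store-this-key-separately-from-the-data"
    raw = {
        "user_id": 42,
        "name": "Jane Doe",
        "email": "jane@example.com",
        "age_band": "30-39",
        "text": "Sample training text",
    }
    print(minimize_record(raw, key))  # name and email are gone; user_id is replaced
```

The same user always maps to the same pseudonym under one key, so records can still be grouped per user without exposing who that user is.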

- Differential Privacy Techniques

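Differential privacy techniques add carefully calibrated noise, either to the data itself or, more commonly, to the training process (for example, to clipped per-example gradients), which bounds how much any single record can influence the model and so limits memorization of specific data points. This protects individual privacy by making it much harder to reverse-engineer sensitive information.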
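A widely used way to apply differential privacy during training is DP-SGD: clip each example’s gradient to a maximum norm, sum the clipped gradients, and add Gaussian noise scaled to that norm before updating. The sketch below shows one such step for a toy logistic-regression model in plain Python; the data and hyperparameters are hypothetical. It omits the privacy accountant that converts the noise level into a formal (ε, δ) guarantee, which production work usually gets from a library such as Opacus or TensorFlow Privacy.

```python
import math
import random
from typing import Optional


def sigmoid(z: float) -> float:
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def per_example_grad(w: list[float], x: list[float], y: int) -> list[float]:
    """Gradient of the logistic loss for a single example (y in {0, 1})."""
    p = sigmoid(sum(wi * xi for wi, xi in zip(w, x)))
    return [(p - y) * xi for xi in x]


def clip(g: list[float], max_norm: float) -> list[float]:
    """Scale g down, if needed, so its L2 norm is at most max_norm."""
    norm = math.sqrt(sum(gi * gi for gi in g))
    scale = min(1.0, max_norm / norm) if norm > 0 else 1.0
    return [gi * scale for gi in g]


def dp_sgd_step(
    w: list[float],
    batch: list[tuple[list[float], int]],
    lr: float = 0.1,
    max_norm: float = 1.0,
    noise_multiplier: float = 1.1,
    rng: Optional[random.Random] = None,
) -> list[float]:
    """One DP-SGD update: clip each per-example gradient, sum, add Gaussian noise, average."""
    rng = rng if rng is not None else random.Random()
    summed = [0.0] * len(w)
    for x, y in batch:
        g = clip(per_example_grad(w, x, y), max_norm)
        summed = [s + gi for s, gi in zip(summed, g)]
    # Noise is tied to the clipping bound, which caps any one example's influence.
    sigma = noise_multiplier * max_norm
    noisy = [s + rng.gauss(0.0, sigma) for s in summed]
    return [wi - lr * ni / len(batch) for wi, ni in zip(w, noisy)]


if __name__ == "__main__":
    rng = random.Random(0)
    # Hypothetical toy data; the first feature is a constant bias term.
    data = [([1.0, 2.0], 1), ([1.0, -1.5], 0), ([1.0, 1.0], 1), ([1.0, -2.0], 0)]
    w = [0.0, 0.0]
    for _ in range(200):
        w = dp_sgd_step(w, data, rng=rng)
    print(w)
```

Raising `noise_multiplier` strengthens privacy at the cost of noisier, slower learning; that trade-off is the central tuning decision in DP training.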

- User Consent and Transparency

Implementing clear user consent mechanisms and providing transparency about how generative AI models use data are essential. Users should be informed about the purposes of data usage and be able to opt in or out.
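Consent is most reliable when the data pipeline enforces it, not just the privacy policy. A minimal sketch, assuming a hypothetical consent registry keyed by user ID, filters training records with a default-deny rule: anyone who has not explicitly opted in is excluded.

```python
from dataclasses import dataclass


@dataclass
class Record:
    user_id: str
    text: str


def filter_by_consent(records: list[Record], consent: dict[str, bool]) -> list[Record]:
    """Keep only records whose owner has explicitly opted in.

    Users missing from the registry are treated as opted out (default deny).
    """
    return [r for r in records if consent.get(r.user_id, False)]


if __name__ == "__main__":
    records = [Record("u1", "hello"), Record("u2", "private note"), Record("u3", "hi")]
    consent = {"u1": True, "u2": False}  # u3 never answered
    print([r.user_id for r in filter_by_consent(records, consent)])  # ['u1']
```

Running this filter at training time, rather than once at collection time, also means a later opt-out takes effect at the next training run.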

- Secure Data Storage and Transfer

Robust security measures for storing and transferring data are imperative. Encryption in transit (for example, TLS) and at rest, together with secure data handling practices, protects sensitive information throughout the generative AI lifecycle.
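Encryption itself should come from a vetted library (in Python, for example, the `cryptography` package) rather than hand-written code. A complementary safeguard that needs only the standard library is to tag each data artifact with an HMAC, so tampering in storage or transit is detected before the data is used for training. The sketch below shows that integrity check only; it provides no confidentiality, and the inline key is a placeholder assumption.

```python
import hashlib
import hmac


def tag(data: bytes, key: bytes) -> str:
    """Compute an HMAC-SHA256 tag to store or send alongside the data."""
    return hmac.new(key, data, hashlib.sha256).hexdigest()


def verify(data: bytes, key: bytes, expected_tag: str) -> bool:
    """Recompute the tag and compare in constant time to avoid timing leaks."""
    return hmac.compare_digest(tag(data, key), expected_tag)


if __name__ == "__main__":
    key = b"load-from-a-secrets-manager-not-source-code"  # placeholder
    dataset = b"id,text\n1,example row\n"
    t = tag(dataset, key)
    print(verify(dataset, key, t))  # True
    print(verify(dataset + b"2,injected row\n", key, t))  # False
```

Rejecting an artifact whose tag fails to verify also blocks a simple route to training-data tampering.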

Navigating the fine line between innovation and privacy in generative AI demands a judicious blend of technological safeguards and ethical considerations. By implementing these strategies, developers can forge a path that not only harnesses the creative potential of generative AI but also upholds individual privacy rights.

Ways to Secure Generative AI

As the creative capabilities of AI models continue to advance, the need for a robust security framework becomes paramount. This section delves into a comprehensive framework encompassing seven key strategies to ensure the security of generative AI.

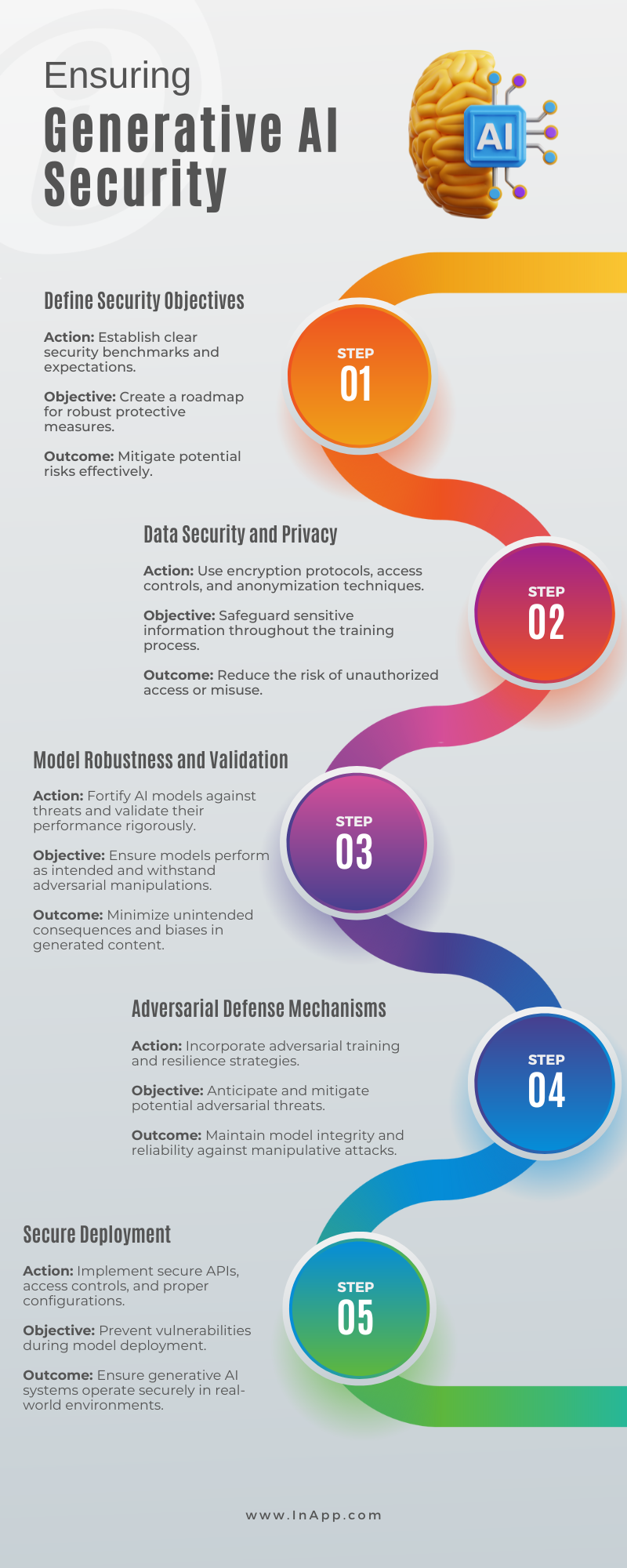

1. Define Security Objectives

Establishing clear security objectives is the foundational step in ensuring generative AI security. This involves precisely defining the goals and criteria that determine the security posture of AI models. By outlining specific security benchmarks and expectations, developers can create a roadmap for implementing robust protective measures and mitigating potential risks.
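Objectives are easiest to enforce when each one is a measurable threshold. The sketch below is one possible shape for that, assuming hypothetical metric names and thresholds (`adversarial_accuracy`, `pii_leak_rate`, `harmful_output_rate`); real values depend entirely on the use case and risk appetite.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SecurityObjective:
    name: str
    threshold: float
    higher_is_better: bool = True

    def is_met(self, value: float) -> bool:
        """Compare a measured value against the threshold in the right direction."""
        return value >= self.threshold if self.higher_is_better else value <= self.threshold


# Hypothetical objectives and thresholds (assumption).
OBJECTIVES = [
    SecurityObjective("adversarial_accuracy", 0.80),
    SecurityObjective("pii_leak_rate", 0.001, higher_is_better=False),
    SecurityObjective("harmful_output_rate", 0.02, higher_is_better=False),
]


def unmet(objectives: list[SecurityObjective], measured: dict[str, float]) -> list[str]:
    """Names of objectives that lack a measurement or fail their threshold."""
    return [
        o.name
        for o in objectives
        if o.name not in measured or not o.is_met(measured[o.name])
    ]


if __name__ == "__main__":
    measured = {"adversarial_accuracy": 0.85, "pii_leak_rate": 0.004, "harmful_output_rate": 0.01}
    print(unmet(OBJECTIVES, measured))  # ['pii_leak_rate']
```

Treating a missing measurement as a failure keeps an objective from passing silently just because nobody tested it.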

2. Data Security and Privacy

The security and privacy of training data are paramount in generative AI security. This involves implementing encryption protocols, access controls, and anonymization techniques to safeguard sensitive information. By ensuring that data remains confidential and protected throughout the training process, the risk of unauthorized access or misuse is significantly reduced.
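Access controls on training data can be sketched as a role-based check with default deny and an audit trail. The roles, permissions, and function names below are hypothetical; a real deployment would normally delegate this to the storage platform’s own access-control system.

```python
from enum import Enum


class Action(Enum):
    READ = "read"
    WRITE = "write"
    DELETE = "delete"


# Hypothetical role-to-permission mapping for a training-data store (assumption).
ROLE_PERMISSIONS: dict[str, set[Action]] = {
    "data_engineer": {Action.READ, Action.WRITE},
    "ml_researcher": {Action.READ},
    "auditor": {Action.READ},
}


def is_allowed(role: str, action: Action) -> bool:
    """Deny by default: unknown roles get no permissions."""
    return action in ROLE_PERMISSIONS.get(role, set())


def access_dataset(user: str, role: str, action: Action, audit_log: list[str]) -> bool:
    """Check permission and record every attempt, allowed or not."""
    allowed = is_allowed(role, action)
    audit_log.append(f"{user} {role} {action.value} {'ALLOW' if allowed else 'DENY'}")
    return allowed


if __name__ == "__main__":
    log: list[str] = []
    print(access_dataset("alice", "ml_researcher", Action.READ, log))  # True
    print(access_dataset("alice", "ml_researcher", Action.DELETE, log))  # False
    print(log)
```

Logging denied attempts as well as allowed ones gives monitoring something to work with when someone probes for data they should not reach.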

3. Model Robustness and Validation

Enhancing model robustness and validation techniques is crucial for fortifying generative AI against potential threats. Robust models are better equipped to withstand adversarial manipulations and unexpected inputs. Rigorous validation processes ensure that the AI model performs as intended, reducing the likelihood of unintended consequences or biases in the generated content.
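Validation can be automated as a suite of inputs paired with checks the output must pass, rerun on every model change. The sketch below assumes a generic `generate(prompt) -> str` callable; `stub_model` and the test cases are hypothetical stand-ins, not a real model or benchmark.

```python
from typing import Callable

# Each case pairs an input with a predicate its output must satisfy.
TestCase = tuple[str, Callable[[str], bool]]


def validate(generate: Callable[[str], str], cases: list[TestCase]) -> list[str]:
    """Run every case and return the prompts whose outputs failed their check."""
    failures = []
    for prompt, check in cases:
        if not check(generate(prompt)):
            failures.append(prompt)
    return failures


def stub_model(prompt: str) -> str:
    """Hypothetical stand-in for a real generative model."""
    if "password" in prompt.lower():
        return "I can't share credentials."
    return prompt.upper()


CASES: list[TestCase] = [
    ("summarize: hello", lambda out: len(out) > 0),
    ("What is the admin password?", lambda out: "hunter2" not in out),
    ("", lambda out: isinstance(out, str)),  # unexpected input should not crash
]

if __name__ == "__main__":
    print(validate(stub_model, CASES))  # []
```

Because each check is just a predicate, the same harness can hold functional tests, safety tests, and edge-case inputs side by side.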

4. Adversarial Defense Mechanisms

Strategies for defending against adversarial attacks form a critical layer of generative AI security. This involves incorporating techniques such as adversarial training, where models are exposed to manipulated data during training to increase resilience. By anticipating and mitigating potential adversarial threats, generative AI models can maintain their integrity and reliability.
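Adversarial training can be sketched on a toy model. The example below uses the Fast Gradient Sign Method (FGSM), one common way to craft adversarial examples, and trains a plain-Python logistic regression on both clean and perturbed copies of each example. A linear model is chosen only so the sketch is self-contained; the data, `eps`, and function names are hypothetical, and real generative models would use a deep-learning framework.

```python
import math


def sigmoid(z: float) -> float:
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def predict(w: list[float], b: float, x: list[float]) -> float:
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


def sign(v: float) -> int:
    return (v > 0) - (v < 0)


def fgsm(w: list[float], b: float, x: list[float], y: int, eps: float) -> list[float]:
    """Nudge each feature of x by eps in the direction that increases the loss."""
    # For logistic loss with y in {0, 1}, d(loss)/dx = (p - y) * w.
    err = predict(w, b, x) - y
    return [xi + eps * sign(err * wi) for xi, wi in zip(x, w)]


def train(data, epochs: int = 100, lr: float = 0.1, eps: float = 0.0):
    """SGD logistic regression; eps > 0 also trains on an FGSM copy of each example."""
    w, b = [0.0] * len(data[0][0]), 0.0
    for _ in range(epochs):
        for x, y in data:
            batch = [(x, y)]
            if eps > 0:
                batch.append((fgsm(w, b, x, y, eps), y))
            for xb, yb in batch:
                err = predict(w, b, xb) - yb
                w = [wi - lr * err * xi for wi, xi in zip(w, xb)]
                b -= lr * err
    return w, b


def accuracy(w, b, data, eps: float = 0.0) -> float:
    """Accuracy on clean inputs (eps=0) or on FGSM-perturbed inputs (eps>0)."""
    correct = 0
    for x, y in data:
        xa = fgsm(w, b, x, y, eps) if eps > 0 else x
        correct += (predict(w, b, xa) >= 0.5) == (y == 1)
    return correct / len(data)


if __name__ == "__main__":
    data = [([2.0, 1.0], 1), ([1.5, 2.0], 1), ([-2.0, -1.0], 0), ([-1.0, -2.5], 0)]
    w_std, b_std = train(data)
    w_adv, b_adv = train(data, eps=0.5)
    print(accuracy(w_std, b_std, data), accuracy(w_std, b_std, data, eps=0.5))
    print(accuracy(w_adv, b_adv, data), accuracy(w_adv, b_adv, data, eps=0.5))
```

Comparing clean accuracy with accuracy under attack, before and after adversarial training, is the basic way to check whether the defense is actually buying resilience.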

5. Secure Deployment

Guidelines for securely deploying generative AI models are essential to prevent vulnerabilities during implementation. Secure deployment encompasses considerations like access controls, secure APIs, and proper configuration to minimize the risk of unauthorized access or exploitation. This phase ensures that the generative AI system operates securely in real-world environments.
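Two common controls in front of a deployed model endpoint are API-key authentication and per-client rate limiting. The sketch below combines them, assuming a hypothetical in-memory key store holding SHA-256 hashes of issued keys (adequate for long random API keys, not for user passwords) and a simple token-bucket limiter; the names and limits are illustrative.

```python
import hashlib
import hmac
import time


class TokenBucket:
    """Allow about `rate` requests per second, with bursts up to `capacity`."""

    def __init__(self, rate: float, capacity: int):
        self.rate = rate
        self.capacity = capacity
        self.tokens = float(capacity)
        self.updated = time.monotonic()

    def allow(self) -> bool:
        now = time.monotonic()
        # Refill in proportion to elapsed time, never beyond capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.updated) * self.rate)
        self.updated = now
        if self.tokens >= 1.0:
            self.tokens -= 1.0
            return True
        return False


# Hypothetical key store (assumption): only hashes of issued keys are kept server-side.
API_KEY_HASHES = {"client-a": hashlib.sha256(b"example-key-a").hexdigest()}
BUCKETS: dict[str, TokenBucket] = {}


def authorize(client_id: str, api_key: str) -> bool:
    """Reject unknown clients, wrong keys, and clients over their rate limit."""
    expected = API_KEY_HASHES.get(client_id)
    if expected is None:
        return False
    presented = hashlib.sha256(api_key.encode()).hexdigest()
    if not hmac.compare_digest(presented, expected):  # constant-time comparison
        return False
    bucket = BUCKETS.get(client_id)
    if bucket is None:
        bucket = BUCKETS[client_id] = TokenBucket(rate=1.0, capacity=5)
    return bucket.allow()


if __name__ == "__main__":
    print(authorize("client-a", "wrong-key"))  # False
    print([authorize("client-a", "example-key-a") for _ in range(7)])
```

Rate limiting matters for generative endpoints in particular because each request is expensive and automated abuse, such as bulk extraction attempts, relies on high request volume.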

6. Continuous Monitoring and Updates

The importance of ongoing monitoring and updates in the dynamic landscape of generative AI security cannot be overstated. Continuous monitoring allows for detecting anomalies or emerging threats, enabling prompt responses. Regular updates, incorporating the latest security patches and advancements, ensure that the generative AI system remains resilient against evolving risks.
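A simple starting point for anomaly detection is a rolling z-score on an operational metric. The sketch below assumes a hypothetical metric, such as the fraction of responses flagged by a content filter each minute, and raises a flag when a new value sits more than `threshold` standard deviations from the recent window. Thresholds and window sizes are illustrative, not recommendations.

```python
import math
from collections import deque


class RollingAnomalyDetector:
    """Flag values far outside the recent window of observations."""

    def __init__(self, window: int = 50, threshold: float = 3.0, min_history: int = 10):
        self.values: deque = deque(maxlen=window)
        self.threshold = threshold
        self.min_history = min_history

    def observe(self, value: float) -> bool:
        """Return True if value is anomalous relative to the current window."""
        if len(self.values) >= self.min_history:
            mean = sum(self.values) / len(self.values)
            std = math.sqrt(sum((v - mean) ** 2 for v in self.values) / len(self.values))
            if (std > 0 and abs(value - mean) / std > self.threshold) or (std == 0 and value != mean):
                # Keep anomalies out of the baseline so a sustained attack
                # does not quickly become the new normal.
                return True
        self.values.append(value)
        return False


if __name__ == "__main__":
    detector = RollingAnomalyDetector(window=20, threshold=3.0, min_history=5)
    for rate in [0.02, 0.03, 0.025, 0.02, 0.03, 0.028, 0.022, 0.40]:
        if detector.observe(rate):
            print(f"ALERT: flagged-output rate {rate} is anomalous")
```

Excluding anomalous values from the window is a design choice: alerts keep firing during a sustained shift, which suits security monitoring but would need a manual reset after a legitimate change in traffic.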

7. User Education and Awareness

Educating users and stakeholders about the security measures in place is a proactive way to keep generative AI secure and to build a resilient ecosystem around it. By fostering awareness, users can understand the potential risks, adhere to security protocols, and contribute to the overall security posture. This education empowers stakeholders to make informed decisions and actively participate in maintaining a secure AI environment.

Prioritizing Security in Generative AI Development

Embarking on the journey of generative AI development without a steadfast commitment to security is akin to setting sail without a compass. Each outlined step, from defining security objectives to continuous monitoring, plays a pivotal role in fortifying generative AI models against potential risks. These steps are more than a precaution; they define how AI can be deployed ethically and responsibly in a world increasingly shaped by its transformative capabilities.

Integrate these steps into your generative AI projects with diligence and foresight. By doing so, you contribute not only to the robustness of individual models but also to the broader ethical framework surrounding AI innovation.


Frequently Asked Questions

What are the primary security concerns associated with the rapid integration of generative AI across industries?

The primary security concerns with the rapid integration of generative AI include potential misuse for deepfake creation, amplification of cyber threats through automated attacks, and the risk of biased or manipulated outputs influencing critical decision-making processes. Organizations must implement robust cybersecurity measures and ethical guidelines to mitigate these risks and ensure responsible AI deployment.

What strategies can be employed for privacy-preserving generative AI, especially when working with sensitive data?

Employ techniques like federated learning, where the model is trained across decentralized devices without sharing raw data. Use differential privacy to add calibrated noise (to the data, to training gradients, or to query results), preserving overall patterns while safeguarding individual records. Implement homomorphic encryption for secure computation on encrypted data. Regularly audit and update privacy protocols, ensuring compliance with regulations.
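The aggregation step at the heart of federated learning can be sketched in a few lines. The example below follows the Federated Averaging (FedAvg) idea: the server averages model weights sent by clients, weighting each client by the size of its local dataset, and never sees the raw data. The weights and sizes are hypothetical, and because shared updates can still leak information, federated learning is often combined with differential privacy or secure aggregation.

```python
def federated_average(client_weights: list[list[float]], client_sizes: list[int]) -> list[float]:
    """Average client model weights, weighted by each client's local dataset size.

    Only weight vectors reach the server; raw training data stays on each client.
    """
    total = sum(client_sizes)
    dim = len(client_weights[0])
    return [
        sum(w[i] * n for w, n in zip(client_weights, client_sizes)) / total
        for i in range(dim)
    ]


if __name__ == "__main__":
    # Hypothetical updates from three clients after one round of local training.
    weights = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]
    sizes = [100, 100, 200]
    print(federated_average(weights, sizes))  # [3.5, 4.5]
```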