The year 2017 has witnessed an explosion of Machine Learning (ML) applications across many industries. Machine Learning is the field of study within artificial intelligence that gives computers the ability to learn without being explicitly programmed. It uses three types of learning: Supervised Learning, Unsupervised Learning, and Reinforcement Learning. To know more, you can refer to one of our infographics on Machine Learning. The idea behind ML is to collect data, train a model that learns the patterns in it, analyze the results, and then tune the model until the results are satisfactory.

The application of Machine Learning has extended across industries like manufacturing, e-commerce, financial services, and energy. Many tools have been developed for Machine Learning, such as Apache Singa, Apache Spark MLlib, Oryx 2, and Amazon Machine Learning (AML). As the study of ML matures, the tools are also becoming more standardized, robust, and scalable. TensorFlow (TF) is one such tool that has carved out its own space in ML applications. TensorFlow was initially developed by the Google Brain team as an internal tool for Google’s products and was then released under the Apache 2.0 open-source license in 2015. Across Google’s major projects, close to 6000 use TensorFlow in some form or other. Rather than a general-purpose ML tool, TensorFlow is mostly used as a Deep Learning toolkit. Deep learning, unlike the basic ML algorithms, is a broader family of ML methods that tend to mimic how our brains process information.

TensorFlow Overview

Ever since Google open-sourced TensorFlow, it has gained popularity and wide acceptance in the research community and on forums like GitHub and Stack Overflow. To understand it better, let’s look at its components and the programming paradigm behind it.

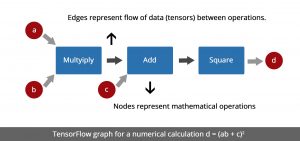

The main concept in TensorFlow is that numerical computations are expressed as graphs, and graphs are made of tensors (data) and nodes (mathematical operations). Tensors are multidimensional arrays that flow between these nodes, hence the name TensorFlow. The tensors and operations are defined by the user up front, and the actual calculation happens only when data is fed into the graph. Nodes represent the mathematical operations; each takes zero or more tensors as input and produces zero or more tensors as output.

Fig 1: A Graph in TensorFlow
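The build-first, run-later idea described above can be sketched in a few lines of plain Python. This is a toy illustration only, not TensorFlow's API: the names `Placeholder`, `Constant`, `Op`, and `run` are hypothetical and exist just to show that defining the graph and feeding data into it are two separate steps.

```python
# Toy dataflow graph in plain Python. This is NOT TensorFlow's API; every
# class and function name below is a hypothetical stand-in for illustration.
import operator


class Node:
    """Base class for everything that can appear in the graph."""


class Placeholder(Node):
    """A named input whose value is supplied only when the graph is run."""

    def __init__(self, name):
        self.name = name


class Constant(Node):
    """A fixed value baked into the graph."""

    def __init__(self, value):
        self.value = value


class Op(Node):
    """An operation node: applies a function to the results of its inputs."""

    def __init__(self, fn, *inputs):
        self.fn = fn
        self.inputs = inputs


def run(node, feed):
    """Evaluate `node` by recursively evaluating the nodes it depends on."""
    if isinstance(node, Placeholder):
        return feed[node.name]
    if isinstance(node, Constant):
        return node.value
    args = [run(inp, feed) for inp in node.inputs]
    return node.fn(*args)


# Build the graph for y = w * x + b. Nothing is computed at this point.
x = Placeholder("x")
w = Constant(3.0)
b = Constant(1.0)
y = Op(operator.add, Op(operator.mul, w, x), b)

# Computation happens only when data is fed into the graph.
result_a = run(y, {"x": 2.0})   # evaluates 3.0 * 2.0 + 1.0
result_b = run(y, {"x": 10.0})  # same graph, different input
print(result_a, result_b)
```

The same graph object `y` is reused for both runs; only the fed value changes, which is the property that lets an execution engine decide separately where and how each operation runs.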

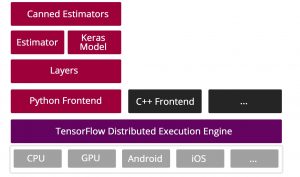

One of the advantages of TensorFlow is that it is a low-level toolkit that is portable and scalable across many platforms. The code can run on CPUs, GPUs (Graphics Processing Units), mobile devices, and TPUs (Tensor Processing Units, which are Google’s dedicated TensorFlow processors). Above the platform layer is the Distributed Execution Engine, which executes the graph of operations and distributes it among the processors. Initially, Python was the main language used to build the graphs, but once TensorFlow was open-sourced, community support grew to include other languages such as C++, Java, Haskell, Go, Julia, C#, and R.

Fig 2: TensorFlow Architecture (Picture Courtesy TensorFlow.org)

The heavy graph orientation of TensorFlow is one of its greatest strengths. However, many people find it difficult to code against the graph directly. To spare users this low-level work, TensorFlow provides libraries and API layers that make it easier to work on models. The Layers API allows users to construct models without having to work with graphs and operations directly. Alongside it are the Estimator and Keras APIs, which are used to train and evaluate models. The Canned Estimators, included in version 1.3 of TensorFlow, are high-level APIs that allow users to build models with minimal coding. Compared with other ML frameworks like Theano and Torch, the high-level APIs of TensorFlow make building deep learning solutions an accessible and easy task for everyone.
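To give a feel for what a layer-style API hides, here is a minimal plain-Python sketch. It is not TensorFlow's or Keras's implementation: the `Dense` and `Sequential` classes below are hypothetical toys, and the weights are made-up numbers rather than trained values. The point is only that the user stacks layers while the multiply-and-add operations stay out of sight.

```python
# Toy illustration only: `Dense` and `Sequential` are hypothetical
# plain-Python classes, not TensorFlow's or Keras's implementations.


def relu(values):
    """Element-wise max(0, v)."""
    return [max(0.0, v) for v in values]


class Dense:
    """Fully connected layer: out[j] = act(sum_i x[i] * W[i][j] + b[j])."""

    def __init__(self, weights, biases, activation=None):
        self.weights = weights        # shape: [n_inputs][n_outputs]
        self.biases = biases          # shape: [n_outputs]
        self.activation = activation  # optional function applied to outputs

    def __call__(self, inputs):
        outputs = []
        for j, bias in enumerate(self.biases):
            total = bias
            for i, value in enumerate(inputs):
                total += value * self.weights[i][j]
            outputs.append(total)
        return self.activation(outputs) if self.activation else outputs


class Sequential:
    """Chains layers so the output of one becomes the input of the next."""

    def __init__(self, layers):
        self.layers = layers

    def predict(self, inputs):
        for layer in self.layers:
            inputs = layer(inputs)
        return inputs


# The user describes the model as a stack of layers; the low-level
# arithmetic lives inside the layer classes. Weights are arbitrary.
model = Sequential([
    Dense(weights=[[1.0, -1.0], [0.5, 2.0]], biases=[0.0, 1.0], activation=relu),
    Dense(weights=[[2.0], [1.0]], biases=[0.5]),
])

prediction = model.predict([2.0, 4.0])
print(prediction)
```

A real high-level API additionally handles training and evaluation, which this sketch leaves out entirely.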

One of the latest developments in TensorFlow is the developer preview of TensorFlow Lite, released in November 2017. It is designed as a lightweight solution for running machine learning models quickly on mobile and embedded devices. The project is still under active development, and how it evolves is something the community will have to experiment with and see.